Apr 17, 2024

Enabling secure and performant multi-tenant analytics for your PostgreSQL® deployments on Aiven's data platform.

Felix

Announcements

Mar 20, 2024

Aiven for Dragonfly delivers a 700% performance boost to scale with your enterprise needs

Jonah

Feb 28, 2024

Aiven's inaugural Customer Champion awards recognize companies for their remarkable achievements in innovation, excellence and global impact.

Ian

Nov 21, 2023

Integration of EverSQL’s AI-powered engine into the Aiven open source data platform will deliver new performance and cost optimization capabilities to customers

Hannu

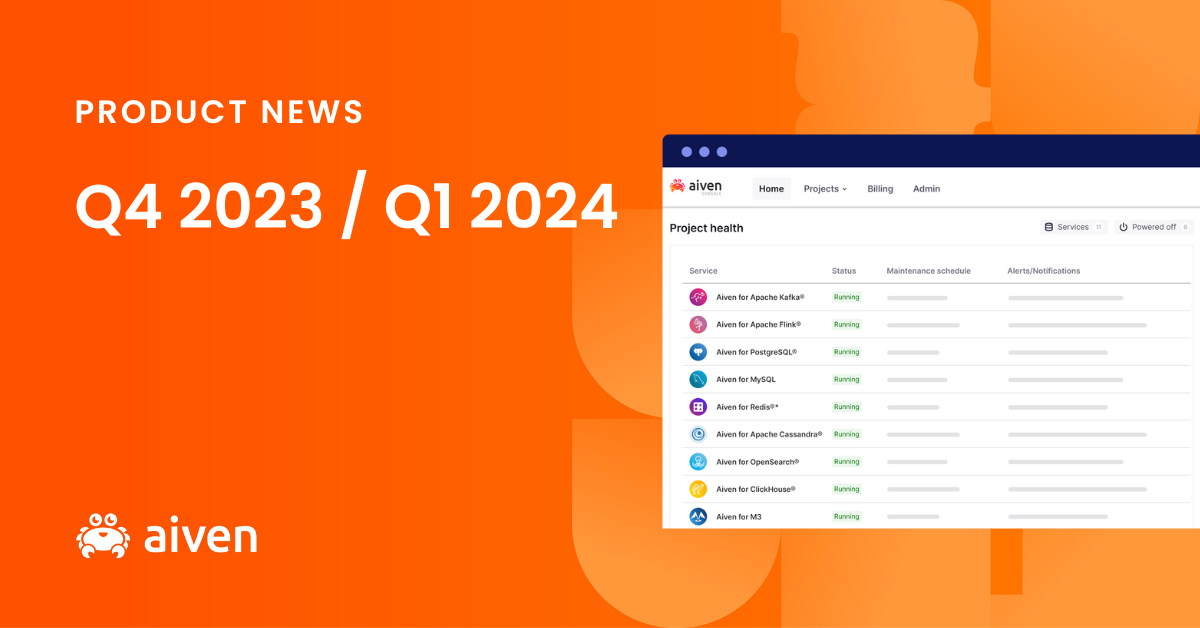

Product updates

Apr 9, 2024

Optimize data infrastructure with the power of AI. Upgrade open source data services and reduce costs with self-service BYOC on AWS.

Janki Patel Westenberg

Jan 18, 2024

Aiven for PostgreSQL® adds support for major version 16. Find out what the key improvements are and how you can get the new version.

Serhat

Dec 20, 2023

Aiven for OpenSearch® is running OpenSearch® 2.11.1, bringing new AI capabilities, visual overhauls, and much more. Check out what's new and upgrade today.

Nick


Customer stories

Mar 25, 2024

SensorFlow’s Co-Founder and CTO on using technology to do more with less and why the platform approach will always win

Florian

Mar 22, 2024

Discover how Micah Lasseter, Director of Leadership and Management Development at Delivery Hero, is creating a link between leadership, learning and diversity.

Florian

Mar 6, 2024

Discover how Doccla uses open source tech to transform healthcare with virtual wards, supported by Aiven, in the UK and Europe.

Florian

Events

Mar 7, 2024

Let Jonah give you his KubeCon EU 2024 recommendations, learn where you can meet with Aiven at the event, and more!

Jonah

Dec 15, 2023

Join us, together with Revenir, Dojo and Hookdeck, as we explore how businesses are pursuing innovation in order to stay ahead.

Cara

Nov 20, 2023

Follow along with Aiven's desert-dwelling DevRel, Jenn Junod, as she shows you where to find Aiven at AWS re:Invent 2023!

Jenn

Life at Aiven

Dec 11, 2023

Yen Pham has been with us for nearly two years. We caught up with her to discuss the facts, figures, and tides of financial life at Aiven.

Ola

Nov 2, 2023

Meet Galo Freile, a Commercial Account Executive based in Berlin. We learn about his journey to Aiven, a recent promotion and how he became our SDR of the year!

Jen

Oct 4, 2023

If you’re planning your next career move in tech, check out this interview with our VP Engineering in Product. It is sure to point you in the right direction!

Ola

Latest articles

Apr 5, 2024

With Basel 3.1-aligned frameworks, governments worldwide are increasingly emphasising the need for financial institutions to maintain operational continuity.

Michael Coates